One big idiotic promise is to replace all actual AI work with just generating some garbage using an off the shelf LLM architecture, going as far as an example in this very thread (generating chemical formulas as sequences of characters with an LLM, as if it was ever likely to work even OK considering how the underlying problem is in 3D and not in fact a linear sequence, and how a million training samples is actually a lot less than a trillion).

Hey, is that a reference to one of my posts?

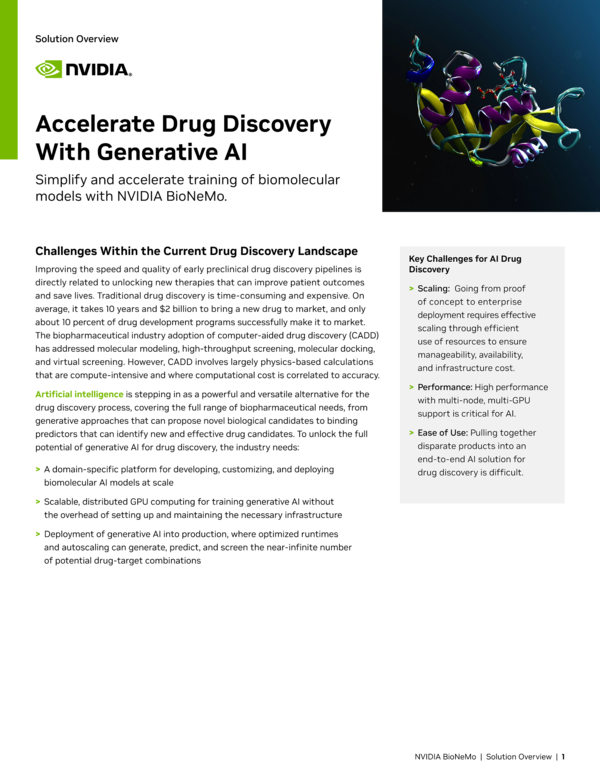

I’m not an expert, so clearly I could be wrong. I brought up BioNeMo, and I’m not sure you can call it off the shelf, given that it was trained specifically on protein sequences and not on random text.

resources.nvidia.com

Meaning it shouldn’t have garbage data, for example.

The model doesn’t work in 3D, per se; it learns the statistically most likely relationships between the atoms in a sequence. However, because the database is known to be good, those relationships capture the most likely 3D arrangements, even though the model never infers that information itself.

P4O6 and P4O10 are 2D representations of 3D structures:

en.m.wikipedia.org

en.m.wikipedia.org

Molar mass calculator computes molar mass, molecular weight and elemental composition of any given compound.

www.webqc.org
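For what it’s worth, what a molar mass calculator does with those two formulas is simple enough to sketch. This is a minimal version that only handles flat formulas like P4O6 (no parentheses or hydrates); the `ATOMIC_MASS` table and the `molar_mass` name are my own for illustration, with standard atomic weights rounded:

```python
import re

# Standard atomic weights in g/mol, rounded; only the elements needed here.
ATOMIC_MASS = {"P": 30.973762, "O": 15.999, "H": 1.008}

def molar_mass(formula):
    """Sum atomic masses for a flat formula such as 'P4O6'."""
    total = 0.0
    # Each match is (element symbol, optional count), e.g. ("P", "4").
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[symbol] * (int(count) if count else 1)
    return total

for formula in ("P4O6", "P4O10"):
    print(formula, round(molar_mass(formula), 2), "g/mol")
```

That comes out to roughly 219.89 g/mol for P4O6 and 283.89 g/mol for P4O10. Note that nothing in the written formula or the arithmetic knows anything about the cage shape of either molecule.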

Because the 3D structure precludes certain arrangements, such as P3O3, the database should never have those in the training set. So when an ‘autocomplete’ step suggests a P-O compound, it should be an existing one, and the one most likely to be found given the surrounding sequence.

The 3D structure isn’t computed by an LLM, but assumed given the training data.
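To make the ‘autocomplete’ idea concrete, here is a toy sketch. It is a plain bigram counter, not an LLM and certainly not BioNeMo, and the five-formula training list and all the function names are made up for illustration. The only point is that a model trained purely on known-good formulas can only propose continuations it has actually seen:

```python
import re
from collections import Counter, defaultdict

# Hypothetical, tiny "known good" training set.
KNOWN_FORMULAS = ["P4O6", "P4O10", "H3PO4", "H2O", "CO2"]

def tokenize(formula):
    """Split 'P4O10' into element-plus-count tokens: ['P4', 'O10']."""
    return re.findall(r"[A-Z][a-z]?\d*", formula)

def train_bigrams(formulas):
    """Count which token follows which across the training set."""
    counts = defaultdict(Counter)
    for formula in formulas:
        tokens = ["<s>"] + tokenize(formula)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def suggest(counts, prev):
    """Return continuations seen after `prev`, most frequent first."""
    return [tok for tok, _ in counts.get(prev, Counter()).most_common()]

counts = train_bigrams(KNOWN_FORMULAS)
print(suggest(counts, "P4"))  # only continuations present in the training data
print(suggest(counts, "P3"))  # 'P3' never appears, so nothing is suggested
```

A real model generalises far beyond this, which is exactly why it can also produce things that were never in the database, so the outputs still need checking.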

Obviously a better solution may exist than using an LLM. It is in fact shoehorning a problem into a solution, as opposed to creating a solution to a problem.

Diffusion models might be better, since as you say the problem space is inherently 3D:

Structure-based generative chemistry is crucial in computer-aided drug discovery. Here, authors propose PMDM, a conditional generative model for 3D molecule generation tailored to specific targets. Extensive experiments demonstrate that PMDM can effectively generate rational bioactive molecules

www.nature.com

Nonetheless, these methods typically represent the molecules as SMILES strings (1D) or graphs (2D), neglecting the crucial 3D-spatial information that is crucial to determine the properties of molecules.
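As a rough illustration of what that 1D/2D/3D distinction means, here is water written three ways. The bond length and angle are approximate textbook values, and the variable names are mine; the sketch just shows that the bond angle can be recovered from the coordinates but is nowhere in the SMILES string or the bond list:

```python
import math

# 1D: SMILES string for water (hydrogens are implicit).
smiles = "O"

# 2D: graph as a bond list over atom indices (0 = O, 1 and 2 = H).
bonds = [(0, 1), (0, 2)]

# 3D: approximate coordinates in angstroms (O-H ~0.9572, H-O-H ~104.5 degrees).
BOND = 0.9572
ANGLE = math.radians(104.5)
coords = [
    (0.0, 0.0, 0.0),                                     # O
    (BOND, 0.0, 0.0),                                    # H
    (BOND * math.cos(ANGLE), BOND * math.sin(ANGLE), 0.0),  # H
]

def bond_angle_deg(center, a, b):
    """Angle a-center-b in degrees, computed from 3D coordinates."""
    va = [x - c for x, c in zip(a, center)]
    vb = [x - c for x, c in zip(b, center)]
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(x * x for x in vb))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

print(round(bond_angle_deg(*coords), 1))  # geometry only exists in the 3D form
```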

So while both LLMs and Stable Diffusion use (pardon my imprecise language) a latent space to sort concepts by similarity, Stable Diffusion has the benefit of connecting a text prompt (such as a chemical formula) with a multi-dimensional output. A picture generally has x and y axes plus three color values per pixel, which are themselves a vector into a color space, so a picture is effectively a three-dimensional array.

You don’t have to use an image model with diffusion, and as I read more, it turns out NVIDIA’s BioNeMo also supports diffusion:

docs.nvidia.com

The Score model is a 3-dimensional equivariant graph neural network that has three layers: embedding, interaction layer with 6 graph convolution layers, and output layer. In total, the Score model has 20M parameters. The Score model is used to generate a series of potential poses for protein-ligand binding by running the reverse diffusion process

EquiDock tries to solve for 3D structures using a graph network:

docs.nvidia.com

My point was never that LLMs are the best solution for any given problem. It was that LLMs have useful properties that can be put to work, and that they will be replaced when other tools are developed that are faster, cheaper, or more useful. Generating potential sequences that seem reasonable is a first step. Other tools then have to be applied to evaluate them, up to and including modeling, synthesis, and verifying biological activity, because an LLM, or any tool, is insufficient by itself.
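In code terms, the workflow I have in mind looks something like the sketch below. Everything here is a placeholder: the candidate strings, the stage names, and the minimum-length cutoff are invented, and the real later stages (modeling, synthesis, assays) are obviously not one-line functions. The only real fact baked in is the 20 standard amino-acid letters:

```python
# The 20 standard amino-acid one-letter codes.
STANDARD_RESIDUES = set("ACDEFGHIKLMNPQRSTVWY")

def run_pipeline(candidates, stages):
    """Apply each (name, keep) filter in order and record how many survive."""
    survivors = list(candidates)
    history = [("generated", len(survivors))]
    for name, keep in stages:
        survivors = [c for c in survivors if keep(c)]
        history.append((name, len(survivors)))
    return survivors, history

# Hypothetical generator output; a real run would have far more candidates.
candidates = ["MKTAYIAK", "MKXZ", "MK"]

# Hypothetical cheap checks standing in for the expensive later steps.
stages = [
    ("valid_residues", lambda seq: set(seq) <= STANDARD_RESIDUES),
    ("min_length", lambda seq: len(seq) >= 5),
]

survivors, history = run_pipeline(candidates, stages)
print(survivors)
print(history)
```

The generator only has to be good enough that something useful survives the filters; it doesn’t have to be right on its own.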