> Uniface?

God I hate it so much. We shifted up a major version, and the IDE has less functionality than the previous version, to the point where I'll use the old one for reviewing/exploring source.

What’s the oldest, weirdest, nastiest, or most unusual language you’ve ever coded in?

- Thread starter CommanderJameson

The last thing I did at my previous job was migrate a big legacy app from Uniface 9 to 10. I liked some things about the new IDE but it definitely felt unfinished.

VAL-2, a Lisp-like language on a Unimate robotic controller that I played with at Honeywell during high school. The engineers working with me were creating a vision recognition system for the Air Force on their Classic Mac computers running Smalltalk.

The controller and, thus, the language were optimized for recursion.

> probably pro*c

It is vendor-specific syntactic sugar. But at least it only grosses up your code in the parts that deal with SQL. The rest of your code is just passed through as is.

The things you forget... another entry in the 'obscure coding history' sweepstakes...

My first official IT job was at a college (in the early 90s) supporting Admin users running all sorts of things on the local VAX cluster. Somehow (I forget the details) I was given the task of finding a way to track calls to the help desk.

There wasn't a budget for it (except my time). I'm sure there was something out there that did that, but it likely wasn't free. The academic IT support side of the house (student and faculty IT support) ran on Unix, had a separate budget, and did their own thing, and the head of Admin IT didn't get along with Academic IT, though the two departments later merged... after I left.

But somehow this college had an unlimited license for DEC's ALL-IN-1 software (No idea how that happened... but we were in New England so maybe somebody knew somebody) and my job included managing/supporting it.

And you could write "applications" in the scripting language built into ALL-IN-1... so I did. It didn't turn out too badly IMO. It was usable at least. At least until I moved on to greener pastures.

Last week, I learned that the Fortran language standard also specifies a textual key-value initialization/serialization format, akin to the role of YAML or JSON in a lot of modern systems. The namelist format is not implemented as some sort of library; rather, access to such files is integrated directly into the read and write statements, which have a namelist-specific mode of operation activated by passing the optional nml specifier. I need to double check, but the recent error that led me to look into this makes me think that different Fortran compilers are inconsistent in how they implement it too, much like their conformance with the rest of the Fortran standard.

It's not that it's harder in C to return in multiple places; all the underlying housekeeping is taken care of for you, so there is nothing to "do" but type return wherever you want (it has been that way in my experience for a very long time). It's just to prevent confusion. It's similar to the guidance of not using goto.

/tangent

As mentioned previously, the problem is with any cleanup that you have to do... closing file handles, releasing memory properly, etc. If you return from multiple places, you have to make sure that you have cleanup in multiple places or else you'll leak resources. Fortunately, modern languages with various semantics help with this... 'with' statements, automatic destructor calling, etc. no matter where/when you 'return'.
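For anyone who hasn't seen it, one common C answer to the cleanup problem described above is a single cleanup label that every failure path jumps to. This is only a minimal sketch: the helper name `first_line_length`, the 256-byte buffer, and the return convention are all made up for illustration and don't come from any post in this thread.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns the length of the first line of `path`
 * (without the newline), or -1 on any error.
 * Every failure path jumps to one label, so cleanup is written once. */
long first_line_length(const char *path)
{
    long result = -1;
    FILE *fp = NULL;
    char *buf = NULL;

    fp = fopen(path, "r");
    if (fp == NULL)
        goto cleanup;

    buf = malloc(256);
    if (buf == NULL)
        goto cleanup;

    if (fgets(buf, 256, fp) == NULL)
        goto cleanup;               /* empty file or read error */

    result = (long)strcspn(buf, "\n");

cleanup:
    free(buf);                      /* free(NULL) is a no-op */
    if (fp != NULL)
        fclose(fp);
    return result;                  /* the only return */
}
```

Calling `first_line_length("notes.txt")` on a file whose first line is `hello` would give 5; a missing file gives -1. Because both handles start as NULL, the cleanup code doesn't need to know how far the function got.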

A handful of times I've done this in C with a macro that checks what was opened/allocated and cleans up before returning, but it doesn't look professional, it just looks like I was trying to be cute.

Code:

#define RETURN(x) do { if (fd != NULL) fclose(fd); if (ptr != NULL) free(ptr); return (x); } while (0)

VERY early on I did some cutesy things... had someone complain about it so I stopped. I'd write loops (in C) without a code block, using commas (statement separators) and only one semi (statement terminator), like this:

Code:

for (int index = 0; index < somval; index++)
    stmt,
    stmt,
    stmt;

The main problem is that it's one code line, so when debugging, one step executes all the statements and you can't see what goes on in the middle.

My favorite cutesy macro is something that returns true or false depending on whether the argument is an array or a pointer. Not posting, leaving it as code golf. (And if anyone takes this up, please spoilerize your answers)

That works great until someone finds a reason to release some of those resources early. The act of releasing the resource typically doesn't also zero out the handle to it, but just leaves it as a dangling reference. If you're very lucky, the code crashes immediately on the double-free or repeated fclose. If you're very unlucky, something else has been allocated at the same address, it's supposed to outlive the function, and is now subject to use-after-free.

In C++, this is all typically the domain of destructors, or the more-recently specified std::experimental::scope_exit. If you're writing C that doesn't need to be portable, there's the cleanup attribute in GCC and Clang.

Evaristo Verde

Smack-Fu Master, in training

My past challenges were writing in APL (to create a movie by interpolating between still images), SNOBOL / SPITBOL (to translate one FORTRAN into GE FORTRAN), and DEC assembly language (to record analog voltages / currents in millisecond timing in neurophysiologic experiments).

Way back in something like 1987-ish I was using something called DBaseII (de-base-two) on MP/M machines. I'm not sure if it was by Borland or someone else. It was rubbish. It was very much a database language, in fact too much so, as the other things like control structures were very lacking indeed. And I recall it only had 26 variables available and no arrays. We often had to concatenate string variables into one to prevent us running out!!

DBase II was by Ashton-Tate, I believe.

When I was a kid, there were many advertisements for courses teaching people how to use computers. The topics covered were DBase, Lotus 123 and WordStar, along with DOS and Windows. It was taught as a user level course, not for programmers. I took one of those courses while in school and it was my first practical exposure to computers outside school. It also included some programming. We added data to the DB and set up simple forms. FoxPro was mentioned as the next step up from DBase.

First programming job was learning Fortran77 and compiling a program to read data from certs for destructive materials test coupons and print it out on reams of 132 column fanfold paper. All on a Terak CP/M machine with TWO! 8 inch floppies to work with. Had to hand enter all the test certs from paper slips too. Printer was out in the hall since it was such a noisy beast.

Next significant language was PostScript once we got a few laser printers in the office and I found out I could do vector graphics however I wanted rather than wait for some faceless company somewhere else to update their software to sort of approximate what the hardware was capable of. Then AIX dropped and came with Ghostscript installed and I could do it directly on the screen.

Then we got Apache to play with and deliver DB info to the people who needed it on the screen where they needed to use it. Got a direct reply from Larry Wall when I was moaning about print() in Perl v3 (see a pattern here). The Oracle/Perl interface was (for me) a warm fuzzy place and worked wonderfully year after year. Even got the real IT team using Perl over that.

Still playing about with SVG. Inkscape actually does things that I find to be rational. Who'd a thunk?

Ooh, PostScript was weird to 16 year old me who was used to Basic, Pascal, and C. Having no mentorship or guidance didn't help.

Tangentially related... thought some folks here might like this video:

Of the qualifying adjectives, I learnt ZX81 BASIC (old) as a kid, learnt COBOL (old), Logo (unusual), Modula-2 (old) and Smalltalk (old) in formal education, and coded in Powerhouse 4GL (unusual) and Perl (nasty) professionally.

Prosaic-or-Poet

Wise, Aged Ars Veteran

Way back when I had a professor at MIT that was doing some work on the radar system they'd use when landing on the moon, he'd sometimes let some of us watch him work with those massive, noisy computers (and cold room) and he gave me an idea I have used a lot. He said that the first computer language was a single digit finger signal. You show the index finger in an indication of 'one' and that is a kind of computer language.

Now the idea that gave me is (these days) when I see an infant on a train or sometimes in a shopping venue and the situation appears proper and after I have made my hello to mom/pop and the infant I then do a very slow 'one' 'two' 'three' finger demonstration to the infant. 'One finger' - 'no finger' - 'Two fingers' - 'no fingers' - 'Three fingers' - 'no fingers' / Stop, and maybe can repeat. Sometimes the parents don't mind to let me do that three times.

You'd be amazed how fast the infant shows an interest. Can't say as I have ever seen recognition of the 1-2-3 signaling on the infant's face, but they sure do look interested about the second or third time. Sometimes right away.

But I got that idea from that professor who was really clear about the idea that most folks don't realize that almost all signaling with finger counting, or even flag signals used at sea, or those smoke signals of some folks and all sorts of that style signaling - - - well, he would offer his view that all those are a form of computer languages. Computer languages were being used before computers were around.

The Defense Dept. supported Hopper because "business" applications in the early 1960s were being coded in 2nd-generation assembly languages by programmers knowing no more than elementary algebra, who were in short supply. I'd done about a year of that myself, so learned COBOL in 1 week flat in 1969. Problems arose when such applications endured.

The problem being, those creaky weird COBOL programs worked.

While it would have been pure misery pecking in code in such a verbose language on a Teletype, COBOL was extremely successful for its designed tasks. And that's why so many projects to "get rid of this old junk and replace it with something in a modern language" have failed that it's a meme all its own.

Combined with the resilient longevity of the System/360 architecture, I really respect the way COBOL code continues to run after all these years.

ABAP, however, I don't respect. After all the advancements we had in language clarity, why did the SAP folk decide to base their language on COBOL?

I think one reason that the old, extremely wordy code works so well is that at the time of its creation, the sheer effort of entry and the high (personal time, amongst other things) cost of errors meant people did a lot more rigorous work up-front, before even touching the keyboard.

I know when I was being taught stuff like JSP (British computer nerds of a certain age just got a cold shiver) our lecturers were very keen on doing as much work with pen and paper as possible before heading to a computer.

10 PRINT "FARTS"

20 GOTO 10

I think that's mostly survivor bias. If you spend enough time on computer folklore sites, you start seeing stories of software just as awful as anything produced today.

A couple of days ago, The Daily WTF ran a story about the Hewlett-Packard HP3000 minicomputer and its disastrous 1972 launch, and it mentions how no-one had noticed it had a tick counter rollover bug that would crash the system after 25 days (repeated by Microsoft in Windows 95), because it was so unstable it would crash for other reasons in less than 20 minutes.
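The arithmetic behind this kind of rollover bug is easy to check. The sketch below assumes a counter that ticks once per millisecond, which the post doesn't actually state for the HP3000, and `days_until_rollover` is just an illustrative name. Under that assumption, a 31-bit count wraps after a little under 25 days, and the well-known Windows 95 figure of about 49.7 days corresponds to a 32-bit count.

```c
#include <assert.h>

/* Days until an unsigned counter `bits` wide wraps around,
 * given how many times per second it is incremented.
 * Assumption: the counter starts at zero and never resets. */
double days_until_rollover(unsigned bits, double ticks_per_sec)
{
    double ticks = 1.0;
    for (unsigned i = 0; i < bits; i++)
        ticks *= 2.0;                       /* 2^bits distinct values */
    return ticks / ticks_per_sec / 86400.0; /* 86400 seconds per day */
}
```

With a 1000 Hz tick, `days_until_rollover(31, 1000.0)` comes out at roughly 24.86 and `days_until_rollover(32, 1000.0)` at roughly 49.71, so a machine that never stays up for more than 20 minutes would never get anywhere near either limit.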

default1024

Smack-Fu Master, in training

Ah, ok, I get it now, frame of reference. I'm coming at this from the much smaller embedded C projects world. Embedded is almost always using statically allocated memory: no memory to free, no files to close, you're just returning from a function call, possibly in an interrupt context or RTOS task context switch. C is still the dominant embedded programming language; I probably wouldn't use C in a resource-rich environment.

Even in a static-resource environment, there might be temporary state changes that need to be reverted on function exit. Think interrupt masks; CPU modes, privilege levels or registers; locks, mutexes, semaphores, etc.

The C I write is for small embedded CPUs, but we're basically coding to MISRA standards.

Recursion and dynamic memory allocation are never allowed.

Single exit is enforced, but the work-arounds are:

1. Do your guard checks then call a worker function.

2. The often derided

do {
    ...
    if (something) break;
    ...
} while (false);
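To make the second work-around concrete, here is a minimal sketch of a single-exit function built on the do/while idiom. Everything in it is hypothetical: the `status_t` codes, the `check_frame` name, the 16-byte limit, and the additive checksum are invented for illustration, and no claim is made that it satisfies any particular MISRA rule set.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical status codes for the example. */
typedef enum { ST_OK = 0, ST_NULL_ARG, ST_BAD_LEN, ST_BAD_SUM } status_t;

/* Validate a frame whose last byte is the 8-bit sum of the bytes before it.
 * Each failed check records a status and breaks out of the do-block,
 * so the function still has exactly one return statement. */
status_t check_frame(const uint8_t *frame, size_t len)
{
    status_t status = ST_OK;

    do {
        if (frame == NULL) { status = ST_NULL_ARG; break; }
        if (len == 0 || len > 16) { status = ST_BAD_LEN; break; }

        uint8_t sum = 0;
        for (size_t i = 0; i + 1 < len; i++)
            sum = (uint8_t)(sum + frame[i]);

        /* This check sits after the loop on purpose: a break inside the
         * for loop would only leave the for loop, not the do-block. */
        if (sum != frame[len - 1]) { status = ST_BAD_SUM; break; }
    } while (false);

    return status;
}
```

For the three-byte frame `{1, 2, 3}` this returns `ST_OK`, and for `{1, 2, 4}` it returns `ST_BAD_SUM`. The main trap with the idiom is the one noted in the comment: `break` only exits the innermost loop.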

There are some fun and weird embedded languages like Motorola's XGATE coprocessor language.

...

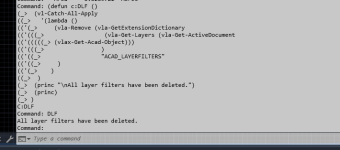

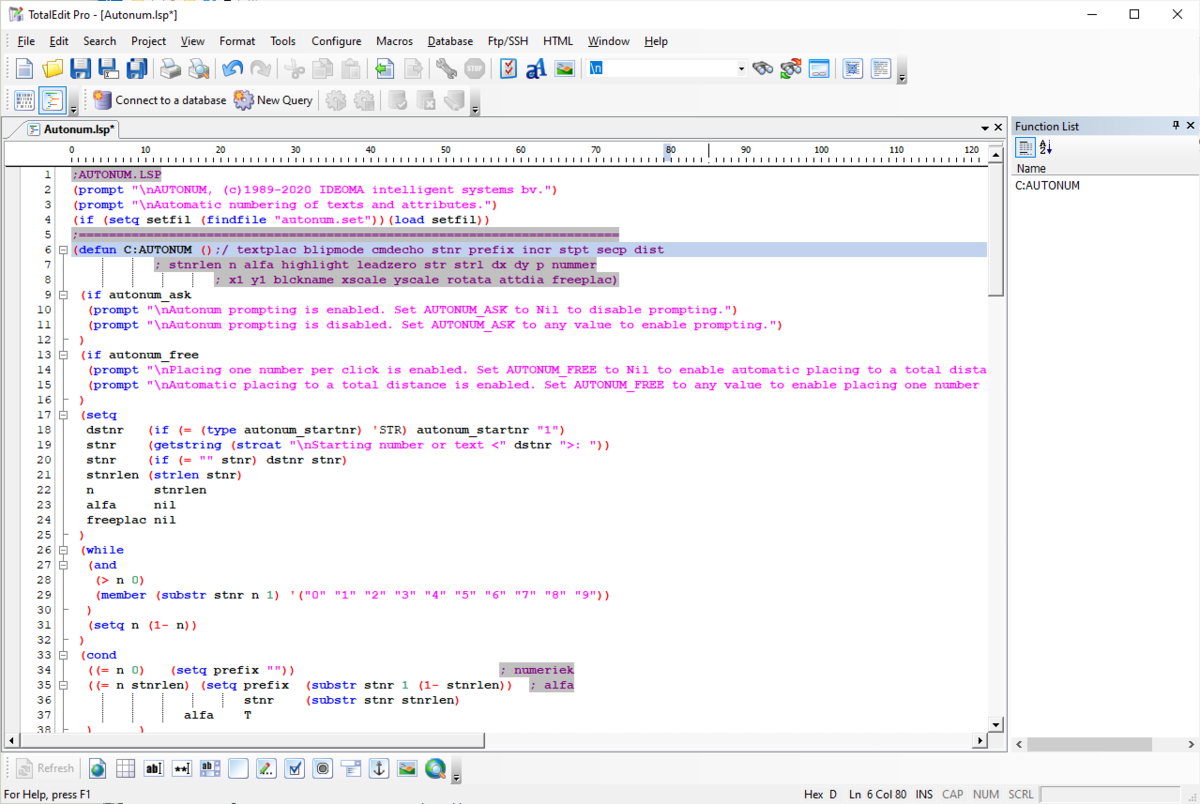

I loved AutoCAD's LISP scripting language, because it matched their drawing model, which was lists of objects containing lists of objects many layers deep.

...

A fun new language for Embedded / Assembly programmers is writing effects and pixel shaders.

You're programming for a GPU using vector instructions.

You only have a very limited number of variables (registers).

There is no global state.

And it's executed in parallel.

How many people know how old LISP really is?

But the weirdest, by far, was APL, designed by a guy called Iverson. The acronym just stands for "A Programming Language". For the un-tortured,

- It focused on sequences of values and ways to transform them. Yes, including a "permute" operator.

- It used plenty of symbols that aren't on any keyboard and certainly aren't ASCII (and at that time, 1975, Unicode was pure fantasy), so you had to type two, perhaps more, ASCII chars and hope you got them right.

- The application mostly just involved plugging floating point numbers into formulae, so FORTRAN would have been a good fit.

I could be angry with whoever had made APL the language of choice at that lab. In fact, I pity them.

Wikipedia says development started in 1958, published in a paper in 1960. Some of its key concepts are from Information Processing Language from a couple of years earlier.

In today's world it is hard to get your head around high level language programs being entered via punched cards.

The article suggests that Lisp is the second oldest HLL still in use after Fortran. I'm wondering what the usage pattern of each of these is nowadays. I've never come across Fortran other than mentions and brief descriptions in books, but am told it is still used in physics labs etc. Lisp is still used in AutoCAD and Emacs, and Scheme is used in GnuCash. The backend of Grammarly is written in Lisp. It seems that Lisp, although not used by that many people, is more spread out by use cases, so likely more known than Fortran. Clojure is a modern variant of Lisp. Fortran appears to just exist as newer revisions of the language - there has been no major reinterpretation of it as such. Maybe that is a sign that it did a good job already?

COBOL appeared in 1959, and that came from FLOW-MATIC which was of 1955 vintage.

The late 50s would have been such a time to be in computing!

For those who had previously only had assembly code as the highest level language available, the change ushered in by serious HLLs must have been nothing short of revolutionary - in terms of developing the conceptualisation of algorithms as much as in accelerating productivity of programmers.

Extempore is music and real-time-processing Scheme/Lisp...

I thought AutoLISP went the way of the dodo a few years back? ISTR much wailing and gnashing of teeth about it at the time. Although I could have dreamed that.

They replaced it with Visual Lisp - which for most people was pretty much the same thing. They added an editor with syntax highlighting.

Just tried pasting from old lisp scripts straight to the command line and it still works fine.

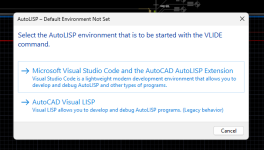

Interestingly it also now gives the option to use Visual Studio Code as the editor too - I hadn't noticed this TBH as I tend to use Notepad++ and hadn't tried invoking the editor within AutoCAD for years.

Clearly I’ve had a bit of a ChatGPT moment here.

Besides direct usage in a lot of scientific and engineering computing (which I'm directly involved in), anyone using NumPy and SciPy is using Fortran code for a substantial number of the numerical kernels under the hood.

With the newly-adopted linear algebra routines in the C++ standard library, there's likely to be a similar bit of Fortran code underlying those pieces of C++ as well.

Substantial is overstating it. NumPy doesn't use it at all; the non-Python bits are mostly C, but it does provide a Fortran to Python bridge. SciPy does use it, and a handful of modules are essentially just Fortran code with a Python wrapper, e.g. interpolation, but some of those chunks have "last modified" dates in the comments dating to the 80s. They seem more like old code that works than anything else. I work in the sciences and do encounter Fortran code every once in a while, but in my experience, Python mostly took over as the day-to-day language.

Could be Autodesk's own marketing. They deprecated their IDE (in favour of VS Code), which makes sense: why develop an IDE when others out there are flexible enough to do what you need?

Some of their announcements made it sound like they were scrapping the whole thing.

That said, I don't know how many people use the IDE - it was around for years before the IDE existed and people managed fairly well. It isn't generally used for big complicated programs, so doesn't seem so necessary for it to have an IDE as some things.

Visual LISP IDE will be removed from ACAD

So if your not already aware, AutoDesk released an AutoLISP extension for Microsoft VS Code. In ACAD 2021 help directory under "What's New or Changed with AutoLISP" they drop this little tidbit Quote Obsolete Visual LISP IDE (Windows Only) - The Visual LISP (VL) IDE has been retired and will be r...

www.cadtutor.net