No CPU socket has given me as much of a headache as LGA2011. Apparently there are three versions of this socket. The issue is discussed in articles such as this one:

3 Different LGA 2011 pin outs: Haswell-EP Pictured Alongside Ivy Bridge-EX, Ivy Bridge-EP and Sandy Bridge-EP (www.servethehome.com)

Moreover, the information is sometimes misleading. For example, the Xeon E7 CPUs are apparently incompatible with the LGA2011 socket, and yet when you look one up on Intel Ark, the socket is listed as LGA2011:

Intel® Xeon® Processor E7-8890 v2 (37.5M Cache, 2.80 GHz) Product Specifications (ark.intel.com)

Ark says the socket is FCLGA2011, but the CPU actually uses LGA2011-1, which is a different socket.

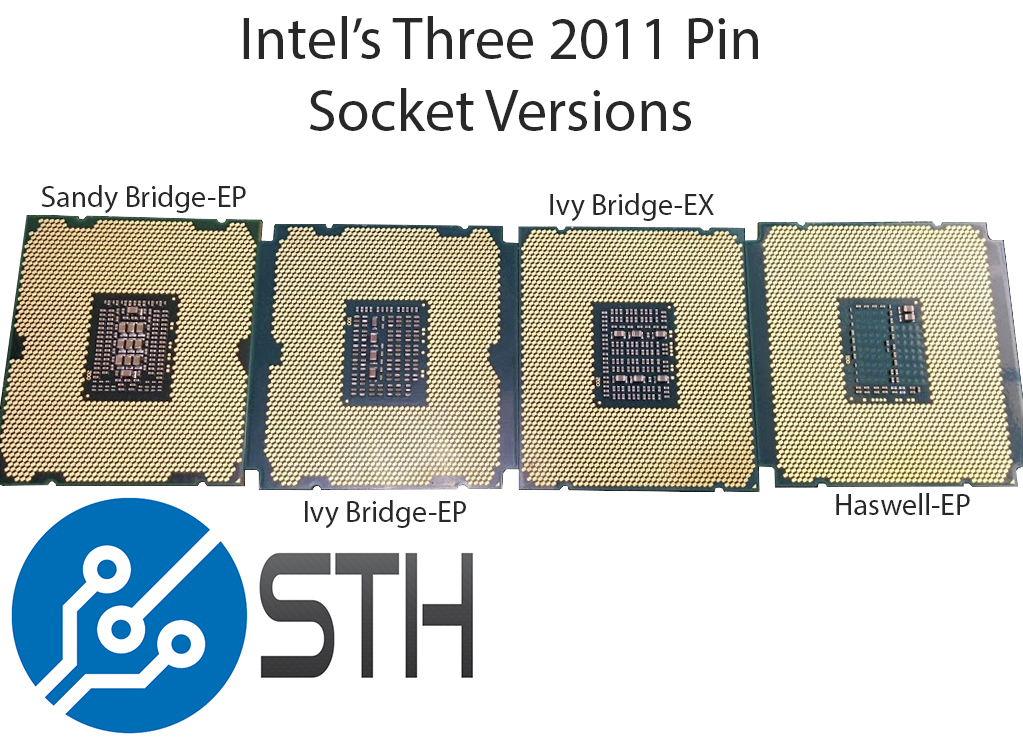

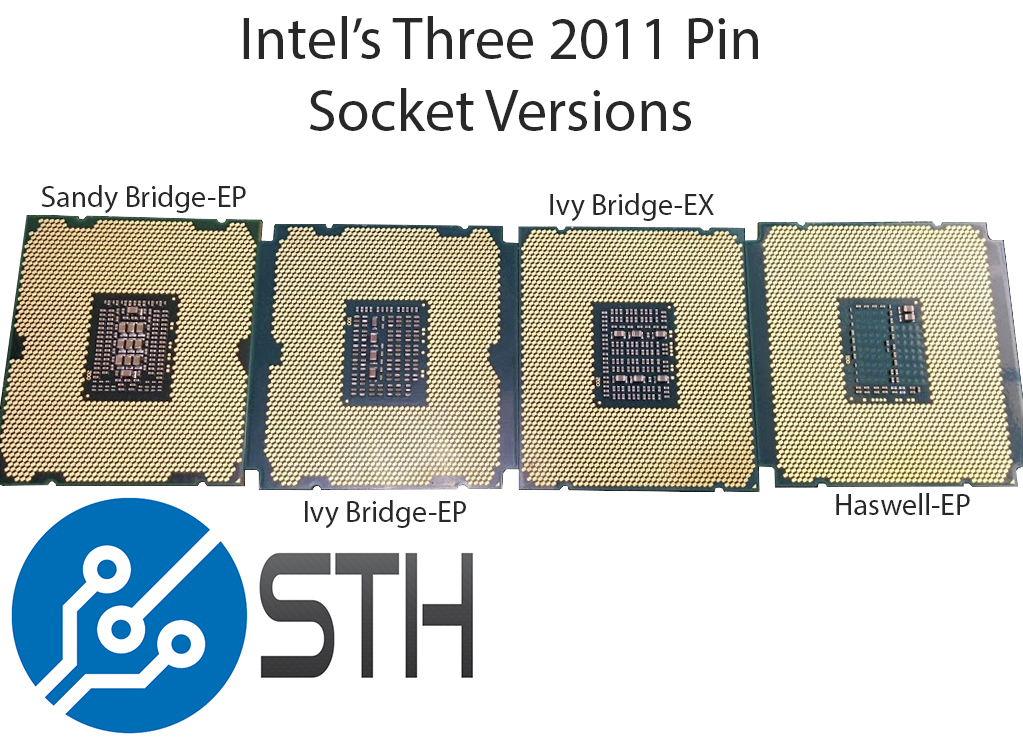

I would assume that the "2011" in LGA2011 refers to the number of pins or "lands" used by the socket. However, if you count the pins, you will find that 2011, 2011-1 and 2011-3 actually have different pin counts.

As far as I understand, the CPUs physically fit in all of these sockets, i.e. you can put a 2011-1 or 2011-3 CPU into an "ordinary" LGA2011 socket, but it will not work because they are not electrically compatible.
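To make the rule concrete for myself, here is a minimal Python sketch of how I think about it: a CPU only works when its socket variant matches the board's variant exactly, regardless of the shared "2011" name. The family-to-socket table is my own summary based on the four processor families named in the ServeTheHome article, and `SOCKET_BY_FAMILY` and `is_compatible` are hypothetical names I made up for illustration, not anything from Intel.

```python
# Illustrative lookup only. The mapping below is my own summary (an assumption),
# not something copied from Intel documentation.
SOCKET_BY_FAMILY = {
    "Sandy Bridge-EP": "LGA2011",
    "Ivy Bridge-EP": "LGA2011",
    "Ivy Bridge-EX": "LGA2011-1",  # Xeon E7 v2, listed on Ark as FCLGA2011
    "Haswell-EP": "LGA2011-3",
}


def is_compatible(cpu_family: str, board_socket: str) -> bool:
    """Return True only when the CPU's socket variant equals the board's variant."""
    return SOCKET_BY_FAMILY[cpu_family] == board_socket


if __name__ == "__main__":
    # An E7 v2 (Ivy Bridge-EX) on an "ordinary" LGA2011 board: same name, no match.
    print(is_compatible("Ivy Bridge-EX", "LGA2011"))
    print(is_compatible("Ivy Bridge-EP", "LGA2011"))
```

The point of comparing the full variant string is that matching on the "LGA2011" prefix alone, which is effectively what the Ark listing invites you to do, gives the wrong answer.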

The only circumstance in which it would make sense to keep the same socket name even though the pin counts differ is to signal compatibility. If you put an E7 into a single-socket motherboard, you may not need all of its QPI links, and some motherboards do not wire every memory channel to a memory slot. In those cases the motherboard could simply "ignore" the unused pins and still benefit from a "high-end" CPU intended for a multi-socket environment with higher memory specs. But this is not the case.

So my question is, what were the engineers at Intel thinking when designing this socket? It doesn't make any sense.


